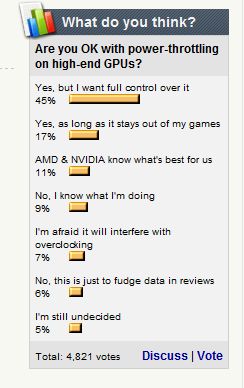

On TechPowerUp there is currently a poll about the new power limiters found on all recent high-end graphics cards (GeForce GTX 500, Radeon HD 6900). The majority of users are OK with these new power monitoring systems BUT only if they have full control over the power limiter (enabling it or totally disabling it).

I’m part of this majority. Power monitoring does not bother me as long as I can fully disable it when I need to. AMD’s power limiter goes in that direction with PowerTune: even if we can’t totally disable it, we can adjust it a bit (+/- 20%), which is nice. I will overclock the HD 6950 DC2 I just received and I hope that AMD’s power limiter won’t prevent me from finding the max OC settings. On NVIDIA cards, the story is different: on the GTX 580 / GTX 570, the no-name power limiter is always enabled and you can’t change it. What’s more, it’s based on an application blacklist, which really sucks! Just to hide a watt-greedy GPU 😉

But things seem to be changing, because the power limiter is now optional on the new GeForce GTX 560 Ti (read: it’s up to the graphics card maker to implement it or not). And guess what? ASUS has decided not to implement that power limiter on its GTX 560 Ti (I just received this confirmation). That’s why in my review of the ASUS GTX 560 Ti I managed to run FurMark at full speed without disabling the limiter with GPU-Z. Even major graphics card makers don’t really like these power limiters.

Another cool thing would be for both NVIDIA and AMD to provide a power limiter API: applications could then take control of the power monitoring system (actually I don’t know if it’s a good thing but I like the idea)…
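To make the idea a bit more concrete, here is a minimal sketch of what such an API could look like from the application side. Everything in it is hypothetical: the `PowerLimiter` class, its method names, and the 200 W figure in the usage note are made up for illustration and do not correspond to any real NVIDIA or AMD interface. The only detail taken from the discussion above is the PowerTune-style +/- 20% adjustment range.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PowerLimiter:
    """Hypothetical model of a vendor power limiter (not a real NVIDIA/AMD API)."""

    base_limit_watts: float            # board power limit chosen by the card maker
    max_adjust_percent: float = 20.0   # PowerTune-style +/- adjustment range
    can_disable: bool = False          # does the vendor allow switching it off?
    adjust_percent: float = 0.0        # current user adjustment
    enabled: bool = True

    def set_adjustment(self, percent: float) -> None:
        """Raise or lower the limit, but only within the allowed range."""
        if abs(percent) > self.max_adjust_percent:
            raise ValueError(
                f"adjustment must stay within +/- {self.max_adjust_percent}%"
            )
        self.adjust_percent = percent

    def disable(self) -> None:
        """Switch the limiter off, if the vendor permits it."""
        if not self.can_disable:
            raise PermissionError("this limiter cannot be disabled")
        self.enabled = False

    def effective_limit(self) -> Optional[float]:
        """Return the current limit in watts, or None when the limiter is off."""
        if not self.enabled:
            return None
        return self.base_limit_watts * (1 + self.adjust_percent / 100)

    def throttle_needed(self, measured_watts: float) -> bool:
        """True when the measured board power exceeds the current limit."""
        limit = self.effective_limit()
        return limit is not None and measured_watts > limit
```

With an arbitrary 200 W base limit, `set_adjustment(20.0)` would raise the effective limit to about 240 W, while a limiter created with `can_disable=True` could be switched off entirely, which is the "full control" most poll voters are asking for.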

And you dear readers, what do you think?

Yes but I want full control over it! 🙂

So far, neither my 460 nor my 570s have troubled me in any way, not it overclocking or anywhere.

Sorry but I am not a big fan of furry donuts! 😛

I meant not IN overclocking or anywhere :X

The thing people forget here is that those power monitors were introduced after graphics cards were destroyed while running FurMark, and that those critical situations couldn’t be detected on demand (the way a CPU throttles when it gets too hot), because it’s not the heat that kills the GPU.

Instead, it is the whole electrical circuit itself that isn’t designed for such constant power draw. So if the power monitor is disabled, every part of the circuit has to be improved to support that draw, and all that without any performance increase in average applications.

Limiters are not useful for me…

They just reflect the way NVIDIA and AMD are trying to mask a lack of cooling on important parts of the graphics card: the GPU itself, obviously, but also the VRMs…

In hotter places, like warm countries, a lack of cooling plus a huge TDP leads to epic failure (NVIDIA with Fermi, aka Thermi…)

It’s all about quality, R&D, thermal design, and using good Japanese ceramic capacitors with a good VRM design… or not…

And it’s all about money… Do you think that, for brand new graphics cards on the enthusiast market, the makers care about your electric bill? So they tune the TDP “à la demande” to save your money and our planet, the Earth!

Or maybe it’s just bullshit marketing…

Good thermal design and good VRM design cost a lot… Remember the “power virus” called FurMark :D the first one to have its TDP blocked via software…

Now we’re talking about a hardmod: directly controlled by a chip… It seems like customers are forced to agree… because you’ll have no choice and no alternative.

So I would like to see more manufacturers like ASUS removing this feature, because it is annoying for high-end users and overclockers…

My advice: always use alternative cooling. Maxing out the TDP, for me, means reaching max O/C without any issues at all…

Remember Crysis

Man, this power limiting thing sucks. If I were asked, it would be ABSOLUTELY OPTIONAL, no forcing and other shenanigans. Okay, if it’s already integrated in the chip, it should be controllable through the graphics card’s control panel, with an option to fully disable power limiting and leave the GPU unrestrained.