UPDATE (2014.04.15): The scores of the AMD FirePro W9100 are available HERE.

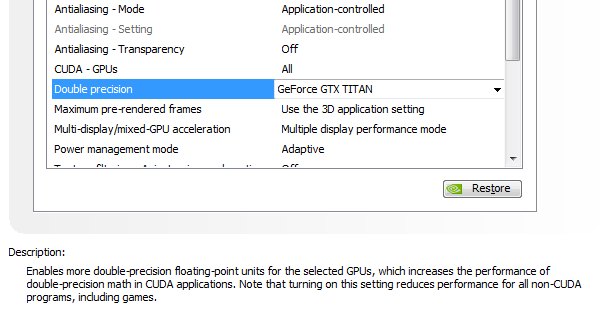

UPDATE (2014.03.06): the NVIDIA control panel has an option to enable or disable full speed FP64 support for the GeForce GTX Titan. By default this option is set to OFF. But full speed FP64 support comes at a price: FP32 performance takes a hit, as the NVIDIA control panel (NVCPL) itself warns:

Note that turning on this setting reduces performance for all non-CUDA programs, including games

I updated the GTX Titan scores:

– Julia FP32 score: 71432 points (1189 FPS)

– Julia FP64 score: 31137 points (518 FPS)

With FP64 enabled, the Julia FP64 score is now in line with expectations: around 1/3 of the FP32 score (the FP32 score measured with FP64 OFF). But with FP64 ON, FP32 performance drops by around 14%.
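For readers who want to redo the arithmetic, here is a minimal Python sketch that recomputes both figures from the three GTX Titan scores quoted in this update. The function and constant names are made up for the illustration; only the scores come from the benchmark runs.

```python
# Scores copied from the update above (GpuTest 0.7.0, 1280x720, 60 seconds).
FP32_FP64_OFF = 83324  # Julia FP32 points, full speed FP64 disabled
FP32_FP64_ON = 71432   # Julia FP32 points, full speed FP64 enabled
FP64_FP64_ON = 31137   # Julia FP64 points, full speed FP64 enabled


def fp32_drop_percent(before: int, after: int) -> float:
    """Relative FP32 loss, in percent, when the option is switched on."""
    return (before - after) / before * 100.0


def fp64_ratio(fp64_points: int, fp32_points: int) -> float:
    """FP64 score expressed as a fraction of an FP32 score."""
    return fp64_points / fp32_points


drop = fp32_drop_percent(FP32_FP64_OFF, FP32_FP64_ON)
ratio = fp64_ratio(FP64_FP64_ON, FP32_FP64_OFF)

print(f"FP32 drop with FP64 ON: {drop:.1f}%")
print(f"FP64 = 1/{1 / ratio:.1f} FP32 (FP32 measured with FP64 OFF)")
```

The FP64 score is compared against the FP32 score measured with FP64 OFF because that is the best FP32 result the card delivers in this test.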


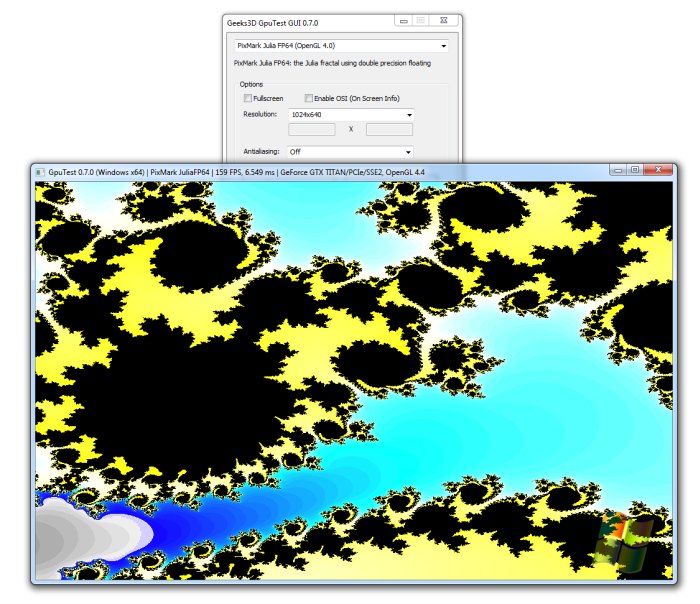

In the freshly released GpuTest 0.7.0 (for Windows, Linux and Mac OS X), we can find a Julia fractal rendered in GLSL using double precision floating point (or FP64) numbers. The Julia FP64 test requires an OpenGL 4 capable GPU and the support of the GL_ARB_gpu_shader_fp64 extension.

The Julia fractal is also available with FP32 (single precision floating point) numbers. That allows some comparisons, like the famous ratio between FP32 and FP64 quoted in many reviews (FP64 = 1/xx FP32)…
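To give an idea of the work done per pixel, here is a minimal escape-time sketch of a Julia set in Python. It is not the GLSL shader used in GpuTest: the constant `c = -0.8 + 0.156i`, the viewport, the iteration limits and the function names are all illustrative assumptions. Python floats are FP64, so this corresponds to the double precision variant of the computation.

```python
def julia_iterations(zx: float, zy: float, cx: float, cy: float,
                     max_iter: int = 256) -> int:
    """Count iterations of z -> z*z + c before |z| exceeds 2."""
    for i in range(max_iter):
        if zx * zx + zy * zy > 4.0:  # |z|^2 > 4 means the point escaped
            return i
        # Complex square plus constant, written out on real/imaginary parts.
        zx, zy = zx * zx - zy * zy + cx, 2.0 * zx * zy + cy
    return max_iter


def render_ascii(width: int = 48, height: int = 20,
                 cx: float = -0.8, cy: float = 0.156) -> list:
    """Render a coarse ASCII view of the set (hypothetical viewport)."""
    rows = []
    for j in range(height):
        zy = -1.2 + 2.4 * j / (height - 1)
        row = ""
        for i in range(width):
            zx = -1.6 + 3.2 * i / (width - 1)
            n = julia_iterations(zx, zy, cx, cy, max_iter=64)
            row += "#" if n == 64 else ("+" if n > 8 else ".")
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render_ascii()))
```

In a fragment shader the same loop runs once per pixel, which is why the arithmetic throughput of the GPU (FP32 or FP64) dominates the score.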

GpuTest 0.7.0, Julia FP64 OpenGL 4.0 test – GeForce GTX Titan

Julia FP32 – Benchmark settings: 1280×720, windowed, MSAA=Off, 60 seconds

| 106391 points (1771 FPS, Windows, Cat 14.2 beta) – Radeon HD 7970 |

| 83324 points (1386 FPS, Windows, R334.89, ***FP64 OFF***) – GeForce GTX Titan |

| 71432 points (1189 FPS, Windows, R334.89, ***FP64 ON***) – GeForce GTX Titan |

| 44105 points (734 FPS, Linux) – Radeon HD 7770 |

| 42946 points (715 FPS, Linux, R319.32) – GeForce GTX 680 |

| 24293 points (404 FPS, Windows, Cat 14.2 beta) – Radeon HD 6970 |

| 23768 points (395 FPS, Windows) – GeForce GTX 750 Ti |

| 6586 points (109 FPS, OSX 10.9) – GeForce GT 650M |

Julia FP64 – Benchmark settings: 1280×720, windowed, MSAA=Off, 60 seconds

| 40885 points (680 FPS, Windows, Cat 14.2 beta) – Radeon HD 7970 |

| 31137 points (518 FPS, Windows, R334.89, ***FP64 ON***) – GeForce GTX Titan |

| 12453 points (207 FPS, Windows, Cat 14.2 beta) – Radeon HD 6970 |

| 7331 points (122 FPS, Windows, R334.89, ***FP64 OFF***) – GeForce GTX Titan |

| 5037 points (83 FPS, Linux, R319.32) – GeForce GTX 680 |

| 4622 points (76 FPS, Linux) – Radeon HD 7770 |

| 1872 points (31 FPS, Windows) – GeForce GTX 750 Ti |

| 1041 points (17 FPS, OSX 10.9) – GeForce GT 650M |

With current graphics drivers (Catalyst 14.2 beta for AMD Radeon and R319/R334 for NVIDIA), AMD Radeon GPUs are faster than NVIDIA GPUs: in FP32, the Radeon HD 7970 is 27% faster than the GeForce GTX Titan. And the gap is much larger in FP64, where the HD 7970 is nearly 6X faster than the GTX Titan with its default settings (FP64 OFF).

If we look at this GFLOPS comparative table, the ratio between FP32 and FP64 is more or less OK for the Radeon HD 7970 (around 1/3 FP32 in this test, while the official/marketing ratio is FP64 = 1/4 FP32) but is way off for the GTX Titan with FP64 OFF: the official ratio is FP64 = 1/3 FP32 while in this test, the ratio is rather FP64 = 1/11 FP32. Same thing for the GTX 750 Ti (Maxwell) and for the GTX 680.
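The measured ratios can be recomputed from the two score lists with a few lines of Python. This is only a sketch: the scores are the ones listed in this article, while the dictionary layout and names are chosen for the illustration.

```python
# (Julia FP32 points, Julia FP64 points) per GPU, copied from the lists above.
SCORES = {
    "Radeon HD 7970": (106391, 40885),
    "GeForce GTX Titan (FP64 OFF)": (83324, 7331),
    "GeForce GTX Titan (FP64 ON)": (71432, 31137),
    "Radeon HD 7770": (44105, 4622),
    "GeForce GTX 680": (42946, 5037),
    "Radeon HD 6970": (24293, 12453),
    "GeForce GTX 750 Ti": (23768, 1872),
    "GeForce GT 650M": (6586, 1041),
}

# FP32 / FP64: a value of 11 means "FP64 = 1/11 FP32" in this test.
ratios = {gpu: fp32 / fp64 for gpu, (fp32, fp64) in SCORES.items()}

# Print from the best FP64 ratio to the worst.
for gpu, r in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{gpu:30s} FP64 = 1/{r:.1f} FP32")
```

Note that each ratio uses the FP32 and FP64 scores measured in the same configuration, so the "FP64 ON" line for the GTX Titan divides by the reduced FP32 score of that mode.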

In full HD 1920×1080 resolution, the ratio stays the same (FP64 = 1/11 FP32 for the GTX Titan, see HERE and HERE).

Possible causes of this sluggishness in FP64:

- the GeForce driver (the OpenGL part) is not optimized for this particular use case.

- there is something wrong in the GLSL code of the Julia fractal that slows down GeForce GPUs. The Julia fractal used in GpuTest is based on this article.

- GTX Titan FP64 performance is not 1/3 FP32

- something limits the fp64 performance inside the GeForce driver…

- other???

MAIN REASON: full speed FP64 is disabled by default on GTX Titan. FP64 can be enabled in NVIDIA control panel.

Strange… Let me hazard a guess: did you enable the double precision option in the control panel? It has to be explicitly enabled to allow full speed FP64 on Titan. By default Titan is restricted to 1/24 performance, like the GTX 680.

I heard that there is some option in NVidia drivers that should switch between game performance\full FP64. Did you switch it?

My GeForce 660 driver 334.89

35788 595fps

3119 51fps

595/51 = 11 so 1/11 too. Maybe that’s driver, I don’t know

@JeGX:

GTX Titan MUST be ALWAYS faster than any Radeon!! Therefore, please immediately remove your abnormal results because GTX Titan series are very expensive!

nuninho1980

results are definitely abnormal because my not so expensive GF660 should have 1/24 while it’s 1/11. I’m happy 😀

At 720p running same OS and same GFX driver, my GTX 480@reference-stock got:

FP32: 24124 pts (401 fps)

FP64: 6508 pts (108 fps)

In any Fermi card – FP64 is almost 1/4 than FP32 while Kepler – 1/11.

The double precision switch is in the NVCP for the Titan!

In any Fermi card – FP64 is almost 1/4 slower than FP32. Sorry for my mistake.

Ah… Running GTX Titan, “OpenGL 4 FP64 test” is abnormal, like AIDA64 GPGPU benchmark. 😉

Ran the Julia benchmarks on my a10 7850k,using the HSA beta drivers:

Julia FP32: 22343 points (FPS: 371)

Julia FP64: 2552 points (FPS: 42)

GPU oc’ed to 800Mhz, using 2133MHz RAM. CPU oc’ed to 4.3GHz.

7870 with 14.2, GPU: 1250MHz / Mem @ 1375MHz:

Julia FP32: 95808 points (FPS: 1594)

Julia FP64: 9947 points (FPS: 165)

GTX 780 Ti 1245 X 3850 Mhz – 103184

To add to the mix, my GTX 780 Ti Classy (stock) PCI-E 2.0 x16 using driver 334.89 and Win7 SP1

FP32 = Score: 91709 (FPS: 1526)

FP64 = Score: 8458 (FPS: 140)

@Fedy @lowenz: let me check that, I hope it’s a joke….

@Fedy @lowenz: I found this option! this is just not possible! Why does this option exist? I will do some bench and update the article.

* GTX580 @ 825/4200MHz;

* Win 8 x64, 334.89 driver;

* 1280×720, windowed;

FP64: 8070pts / 134FPS

FP32: 29199pts / 485FPS

@JeGX: This option exists because when you turn it on, the driver downclocks the GPU to 850MHz and disables turbo to keep the TDP in check.

So they decided to let the user choose what they prefer.

@JeGX:

Results have updated – Ok. 🙂

But look with attention: “(122 FPS, Windows, R334.89, ***FP64 ON***) – GeForce GTX Titan” – bad because ***FP64 ON*** is bad but yes ***FP64 OFF***. 😉

Poor performance for Kepler because OpenGL hasn’t CUDA support but yes OpenCL. Very likely NV doesn’t care OpenCL and cancels to fix OCL issue. Therefore, please you must add support CUDA for your OpenGL 4 FP32/FP64.

lol you should’ve known that Titan has two different modes switchable through driver, I’m surprised you didn’t, there are a lot of articles about it 😛 Game mode is for people who don’t need GPGPU and use Titan only for gaming.

@nuninho1980: updated, thanks!

@Fedy: I will consider FP64 for my next benchmarks. Thanks for your explanations.

@John Smith: to be honest, I didn’t read many reviews and articles these last months, I suffer from a terrible lack of time… The important thing is that the title of this article is still true 😀

for me results are: JULIA FP64 Score: 4557points

FPS 75 1024×640 windowed AA:Off

GTX560 + Q9550 + Win 8.1 with Nvidia Quadro beta 334.95 Modded inf for Geforce GTX560

here is the Titan switch in Linux flavor

http://choorucode.com/2013/12/05/how-to-enable-full-speed-fp64-in-nvidia-gpu/

Thanks for the link Stefan!

@JeGX: Can you add CUDA support?? But CUDA support added is REQUIRED. Because OpenCL 1.1 performance is abnormal and is 1.5x slower than OCL 1.0, for NVIDIA only.

Actually there should be Titan Black, same price and one additional SMX + higher clocks.

Interesting app!

@nuninho1980:

This app is a benchmark. So it is supposed to test the actual performance of the GPUs running the same code. Would you look at a benchmark in which the products are compared with different tests protocols? Certainly not. So I think that JeGX should not add cuda support…

If opencl drivers are bad with geforce gpus, then nvidia should work on the drivers. But JeGX is not the one who should work to make their product shine…

Ziple

NVidia don’t want to work on OpenCL because they have CUDA. This is marketing war and 2/3 users have NVidia cards so JeGX should add CUDA if he’s not paid by AMD

I’m not paid by AMD nor by NVIDIA (that would be cool but it’s not the case). I’m totally independent. I don’t understand why we’re talking about OpenCL / CUDA here. I just did a simple OpenGL 4 (and from what I know, both AMD and NVIDIA support OpenGL) test with the FP64 feature exposed by this universal API. With current drivers, HD 7970 is faster than GTX Titan (in this particular test) and that’s all.

Maybe in a few weeks, NVIDIA’s GPU will be faster than AMD’s. Now regarding CUDA or OpenCL. CUDA is certainly an extremely powerful GPU computing solution but today I try to code cross-platform apps that work on NVIDIA, AMD and Intel GPUs. So if I had to code a GPU computing benchmark now, I would do it using OpenCL. And I’m sure (I hope!) that one day NVIDIA will release a super OpenCL driver. But I never said that I won’t code a CUDA benchmark. It would even be interesting to compare the same algorithm in CUDA and in OpenCL on different hardware (hummm, my brain is already thinking of a possible bench, aargghh!). Coding, releasing and updating software takes time (even more when it’s cross-platform) so be patient, and maybe one day you will read on Geeks3D a news item about a new CUDA benchmark… Good night!

@Ziple: you should comment more often 😉

@John Smith

I’m a geek, I don’t care about marketing stuff :). CUDA is a great piece of technology (I use it every day), but here it’s OpenCL only (for now). It’s only that, there’s no conspiracy involved…

@JeGX: I’ll try 🙂

Great bench JeGX, as always.

7950 beats the Titan as well 😉

7950 @ 1250/6000MHz

OS X 10.9.3 beta

1280×720, windowed

FP32: 94286 pts / 1571 FPS

FP64: 39980 pts / 665 FPS

@blacksheep: Ok but GTX Titan (CUDA) would ALWAYS beat any AMD-ATi (OCL), if OpenGL will use CUDA because GeForce card continues get abnormal performance since the “poor” optimization of OpenCL 1.1 driver for GeForce.

nuninho1980

this is OpenGL FP64 test, not OpenCL so this is NVidia’s OpenGL driver issues, probably.

@nuninho1980: I have no doubt that Titan is technically capable to do it, but seems that John Smith is right about the driver thing.

My previous scores were from the latest OS X beta. I reran the benchmarks in Win7 and my 7950 has beaten the 7970 too:

7950 @1250/6000MHz

Win7 x64, Catalyst 13.12

1280×720, windowed

FP32: 124914 pts / 2079 FPS

FP64: 48867 pts / 813 FPS

It’s a shame that OS X drivers are that poor 🙁

@John Smith: “this is OpenGL FP64 test, not OpenCL…”

YES, it has OpenCL! Because look “plugin_gxl3d_opencl_x64.dll” file in “drive-letter:\…\GpuTest_Windows_x64_0.7.0\plugins”. lol.

@up – read more carefully

“we can find a Julia fractal rendered in GLSL using double precision floating point (or FP64) numbers”

GLSL != OpenCL

GLSL = OpenGL

If it was CL kernel it surely wouldn’t be “GLSL” and also would not require that GL_ARB_gpu_shader_fp64 particular extension.

gputest alone probably uses OpenCL somewhere, but not in this test.

So I guess NV cut FP64 performance in that particular area very much too since I am able to get some 3700pts at default settings with 7750 @FP64 which is way more than GTX750

It’s a pure OpenGL test (GLSL shaders with fp64 variables), there’s no OpenCL kernel involved in the rendering. The OpenCL plugin is currently used only with the GPU monitoring plugin to provide information for the GPU database.

GeForce 337.50beta driver backs to normal performances for LuxRender (OpenCL). 🙂

I tried Julia test but still same performance – yeah due to no OCL used but yes OGL used. 😉

OOH! “LuxRender” – bad but yes LuxMark. Sorry. 😉

Got R9 280x yesterday and have just tested it with Cat 14.6 beta. My videoboard is XFX Black with overclocked chip 1080 instead of 1000 for 7970. I got 128273 points, 2135fps for FP32 and 47934 points, 797fps for FP64