In this news item, we learned that the current version of the PhysX engine is compiled for the x87 instruction set and does not use modern sets like SSE2. I found a simple, ready-to-use matrix multiplication code sample here that lets us see the speed difference between the x87 and SSE/SSE2 sets.

I compiled the following code sample with Visual C++ 2005:

    #include <windows.h>
    #include <stdio.h>
    #pragma comment(lib, "winmm.lib") // timeGetTime() lives in winmm.lib

    #define DIM 4

    // Computes mat1 = mat1 * mat2 in place, one row at a time.
    void mul_mat(double **mat1, double **mat2, int sz)
    {
        int ii, jj, kk;
        for (ii = 0; ii < sz; ii++)
        {
            double temp[DIM]; // holds the new row until it is complete
            for (jj = 0; jj < sz; jj++)
            {
                temp[jj] = 0.;
                for (kk = 0; kk < sz; kk++)
                    temp[jj] += mat1[ii][kk]*mat2[kk][jj];
            }
            for (jj = 0; jj < sz; jj++)
                mat1[ii][jj] = temp[jj];
        }
    }

    int main()
    {
        const int sz = 4;
        const int num = 1000000;
        int ii, jj;

        double **mat1 = new double*[sz];
        for (ii = 0; ii < sz; ii++)
            mat1[ii] = new double[sz];

        double **mat2 = new double*[sz];
        for (ii = 0; ii < sz; ii++)
            mat2[ii] = new double[sz];

        for (ii = 0; ii < sz; ii++)
            for (jj = 0; jj < sz; jj++)
            {
                mat1[ii][jj] = double(ii)/sz*double(jj)/sz;
                mat2[ii][jj] = double(ii)/sz*double(jj)/sz;
            }

        printf("\nStarting matrix multiplication loop...");
        DWORD start = timeGetTime();

        //
        // Main matrix loop:
        //
        for (ii = 0; ii < num; ii++)
            mul_mat(mat1, mat2, sz);

        DWORD end = timeGetTime();
        printf("\nElapsed time: %lu ms\n", end - start);
        return 0;
    }

As the author of this code says, it’s a very artificial scenario, but it shows the difference between the different math instruction sets well.

I limited the main loop counter to 1 million matrix multiplications, which is enough to get measurable timings.

In the VS2005 project properties, I set Optimization to Minimize Size (/O1) and changed the Enable Enhanced Instruction Set option (/arch) to test the different sets.

You can download the test pack here:

[download#141#image]

There are three executables in the pack: x87_test.exe, sse_test.exe and sse2_test.exe. I added a batch file for each executable so that the console pauses at the end.

Test 1 – Instruction Set: No Set or x87

– Elapsed time: 2373 ms

Test 2 – Instruction Set: SSE

– Elapsed time: 2368 ms

Test 3 – Instruction Set: SSE2

– Elapsed time: 1112 ms

No doubt, SSE2 is the way to get fast math. Recompiling the PhysX engine with the SSE2 instruction set would be very nice. But as I said in this news, a simple recompilation might lead to some incorrect calculation results, so NVIDIA will have to test such a build first, which may take some time…

Here is the assembly output for the innermost statement of the matrix multiplication, for each instruction set:

temp[jj] += mat1[ii][kk]*mat2[kk][jj];

Set: No Set

    00431A89 mov eax,dword ptr [ii]
    00431A8C mov ecx,dword ptr [mat1]
    00431A8F mov edx,dword ptr [ecx+eax*4]
    00431A92 mov eax,dword ptr [kk]
    00431A95 mov ecx,dword ptr [mat2]
    00431A98 mov eax,dword ptr [ecx+eax*4]
    00431A9B mov ecx,dword ptr [kk]
    00431A9E mov esi,dword ptr [jj]
    00431AA1 fld qword ptr [edx+ecx*8]
    00431AA4 fmul qword ptr [eax+esi*8]
    00431AA7 mov edx,dword ptr [jj]
    00431AAA fadd qword ptr temp[edx*8]
    00431AAE mov eax,dword ptr [jj]
    00431AB1 fstp qword ptr temp[eax*8]
    00431AB5 jmp mul_mat+68h (431A78h)

Set: SSE Set

    00431A89 mov eax,dword ptr [ii]
    00431A8C mov ecx,dword ptr [mat1]
    00431A8F mov edx,dword ptr [ecx+eax*4]
    00431A92 mov eax,dword ptr [kk]
    00431A95 mov ecx,dword ptr [mat2]
    00431A98 mov eax,dword ptr [ecx+eax*4]
    00431A9B mov ecx,dword ptr [kk]
    00431A9E mov esi,dword ptr [jj]
    00431AA1 fld qword ptr [edx+ecx*8]
    00431AA4 fmul qword ptr [eax+esi*8]
    00431AA7 mov edx,dword ptr [jj]
    00431AAA fadd qword ptr temp[edx*8]
    00431AAE mov eax,dword ptr [jj]
    00431AB1 fstp qword ptr temp[eax*8]
    00431AB5 jmp mul_mat+68h (431A78h)

Set: SSE2 Set

    00431A91 mov eax,dword ptr [ii]
    00431A94 mov ecx,dword ptr [mat1]
    00431A97 mov edx,dword ptr [ecx+eax*4]
    00431A9A mov eax,dword ptr [kk]
    00431A9D mov ecx,dword ptr [mat2]
    00431AA0 mov eax,dword ptr [ecx+eax*4]
    00431AA3 mov ecx,dword ptr [kk]
    00431AA6 mov esi,dword ptr [jj]
    00431AA9 movsd xmm0,mmword ptr [edx+ecx*8]
    00431AAE mulsd xmm0,mmword ptr [eax+esi*8]
    00431AB3 mov edx,dword ptr [jj]
    00431AB6 addsd xmm0,mmword ptr temp[edx*8]
    00431ABC mov eax,dword ptr [jj]
    00431ABF movsd mmword ptr temp[eax*8],xmm0
    00431AC5 jmp mul_mat+70h (431A80h)

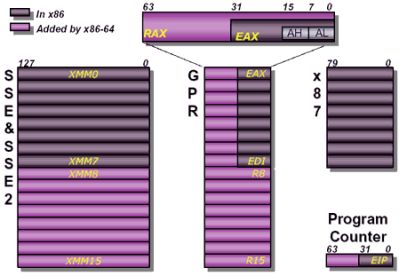

No Set and SSE set have the same code (fp87 instructions????) but SSE2 code really uses another codepath with the use of SSE registers such as xmm0.

In my experience auto-vectorization (compiler generates SSE code automatically) doesn’t really work that well. This means that even if you tell the compiler to generate SSE(2/3/4) you won’t always get SSE instructions. SSE code needs to be coded by hand (preferably with intrinsics) and often requires some modification to adapt the code to SIMD instructions.

Only in very simple scenarios can the compiler do this automatically.
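As an illustration of what "coded by hand with intrinsics" can look like, here is a minimal sketch of a 4x4 double-precision multiply using SSE2 intrinsics from `emmintrin.h`. It is not the article's code: it assumes contiguous row-major storage (`double[16]`) instead of `double**`, writes to a separate output instead of multiplying in place, and the name `mul_mat4` is made up. A plain scalar fallback is included for builds where SSE2 is not enabled.

```cpp
// Enable the intrinsics path only when the compiler targets SSE2.
#if defined(__SSE2__) || defined(_M_X64) || (defined(_M_IX86_FP) && _M_IX86_FP >= 2)
#include <emmintrin.h>  // SSE2 intrinsics: __m128d and the _mm_*_pd functions
#define HAVE_SSE2 1
#else
#define HAVE_SSE2 0
#endif

// out = a * b for row-major 4x4 matrices of doubles.
// Assumption: out does not alias a or b.
void mul_mat4(const double a[16], const double b[16], double out[16]) {
    for (int i = 0; i < 4; ++i) {
#if HAVE_SSE2
        __m128d lo = _mm_setzero_pd();  // accumulates out[i][0..1]
        __m128d hi = _mm_setzero_pd();  // accumulates out[i][2..3]
        for (int k = 0; k < 4; ++k) {
            // Broadcast a[i][k] into both lanes, then multiply it with
            // row k of b, two columns at a time.
            __m128d s = _mm_set1_pd(a[i * 4 + k]);
            lo = _mm_add_pd(lo, _mm_mul_pd(s, _mm_loadu_pd(&b[k * 4])));
            hi = _mm_add_pd(hi, _mm_mul_pd(s, _mm_loadu_pd(&b[k * 4 + 2])));
        }
        _mm_storeu_pd(&out[i * 4], lo);
        _mm_storeu_pd(&out[i * 4 + 2], hi);
#else
        // Scalar fallback: the usual triple loop.
        for (int j = 0; j < 4; ++j) {
            double sum = 0.0;
            for (int k = 0; k < 4; ++k)
                sum += a[i * 4 + k] * b[k * 4 + j];
            out[i * 4 + j] = sum;
        }
#endif
    }
}
```

Note how the data layout had to change to make two adjacent columns loadable with a single instruction, which is the kind of adaptation mentioned above. Whether this beats the compiler's scalar SSE2 code on a given CPU would have to be measured.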

My results Core i7 stock

X87:2090

SSE:2050

SSE2:1170

I meant core i7 860 stock 😛

Some months ago I also did some tests.

I tested matrix sum, subtraction and multiplication by a scalar, for both 64-bit and 32-bit targets, with compiler optimizations and with hand-tuned assembly.

It turned out that the fastest results came from compiler optimizations in 64-bit mode.

Here are the specs of the machine that I used:

Processor: Intel Core 2 Duo T7700 @ 2.4GHz

Memory: 4GB DDR2 667MHz

OS: Windows 7 x64 – Professional

The compiler was the one from Visual Studio 2010 (beta 2 at that time).

Here is the source code with the screenshots of the tests:

http://paginas.fe.up.pt/~ei06120/rs/lib/exe/fetch.php?media=downloads:amun_engine-matrix_profiling.zip

PhII @3.63 GHz:

x87 : 546ms

SSE : 546ms

SSE2: 811ms

WTF ? Pls explain…

PhII X4 @ 3.63 GHz ;). Sorry…

Why doesn’t anyone use SSE3/4, vs. SSE2? I mean SSE2 is old as is SSE and x87. Or is it because SSE2 matrix math is just as fast as SSE3/4’s?

Thanks!

@Mars_999: good question! For my part, SSE and SSE2 are the only instruction sets available in the VS2005 project options. I just looked at VS2010 and only SSE/SSE2 are available there too. Actually, I think the Intel C++ Compiler is required to get SSE3/4…

So we are forced to do hand coded assembly for SSE3/4 in MSVC++? Does GCC support it?

quote: No Set and SSE set have the same code (fp87 instructions????) but SSE2 code really uses another code path with the use of SSE registers such as xmm0.

This is normal: the SSE instruction set only supports 32-bit floating point numbers (float), not 64-bit ones (double) as SSE2 does. So the compiler has no choice but to use the x87 instruction set in this case.
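A simple way to check this for yourself is to build the same kernel for both element types and compare the disassembly. The sketch below is a generic rewrite of the article's `mul_mat` loop (fixed 4x4 arrays instead of `double**`, and the name `mul_mat_inplace` is made up). With the `float` instantiation one would expect an SSE-only build to be able to use scalar single-precision instructions such as `mulss`/`addss`, while the `double` instantiation needs SSE2 for `mulsd`/`addsd`; what a particular compiler actually emits should be verified in the listing.

```cpp
// Same triple loop as the article's mul_mat, generic over the element type.
// Computes m1 = m1 * m2 in place, one row at a time.
template <typename T>
void mul_mat_inplace(T (&m1)[4][4], const T (&m2)[4][4]) {
    for (int i = 0; i < 4; ++i) {
        T temp[4];  // new row i, kept aside until fully computed
        for (int j = 0; j < 4; ++j) {
            temp[j] = T(0);
            for (int k = 0; k < 4; ++k)
                temp[j] += m1[i][k] * m2[k][j];
        }
        for (int j = 0; j < 4; ++j)
            m1[i][j] = temp[j];
    }
}

// Explicit instantiations so both versions appear in a disassembly listing
// even if nothing else in the program calls them.
template void mul_mat_inplace<float>(float (&)[4][4], const float (&)[4][4]);
template void mul_mat_inplace<double>(double (&)[4][4], const double (&)[4][4]);
```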

@Mars_999: SSE3 & SSE4 are _extensions_ of the older SSE implementations, so they don’t replace SSE2 functions with faster ones, instead they add new ones. So it depends on your code if any SSE is feasible for you. And Vector/Matrix math is mostly covered with SSE & SSE2.

@Mars_999: you are not forced to use assembly for SSE3/4.x in MSVC++; you can use the intrinsic functions.

Check the intrinsics headers, for example pmmintrin.h (SSE3) and smmintrin.h (SSE4.1).
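For example, here is a small sketch that uses one SSE3 intrinsic, `_mm_hadd_pd` from `pmmintrin.h`, to finish a 4-element dot product. The function name `dot4` is made up. The `__SSE3__` guard is how GCC and Clang signal that SSE3 is enabled (for instance with `-msse3`); MSVC does not define that macro, so there the header would be included unconditionally. A scalar fallback keeps the code buildable everywhere.

```cpp
#if defined(__SSE3__)
#include <pmmintrin.h>  // SSE3 intrinsics (also pulls in the SSE2 ones)
#endif

// Dot product of two 4-element double vectors.
double dot4(const double* a, const double* b) {
#if defined(__SSE3__)
    __m128d p0 = _mm_mul_pd(_mm_loadu_pd(a), _mm_loadu_pd(b));          // a0*b0, a1*b1
    __m128d p1 = _mm_mul_pd(_mm_loadu_pd(a + 2), _mm_loadu_pd(b + 2));  // a2*b2, a3*b3
    __m128d sum = _mm_add_pd(p0, p1);  // two partial sums, one per lane
    sum = _mm_hadd_pd(sum, sum);       // SSE3: add the two lanes together
    return _mm_cvtsd_f64(sum);         // extract the low lane
#else
    // Scalar fallback when SSE3 is not enabled at compile time.
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
#endif
}
```

As noted in the thread, SSE3 adds instructions like this horizontal add rather than replacing the SSE2 arithmetic, so most of the function is still plain SSE2.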

I noticed that MSVC 2010 (and 2008) used SSE2 even with No Set. I used very simple code, like a loop with x=sin(i*M_PI/180), and I always got SSE instructions.
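To see what the compiler itself has been told to target, the predefined macros can be inspected. The sketch below relies on `_M_IX86_FP` and `_M_X64` (MSVC) and `__SSE__`, `__SSE2__` and `__x86_64__` (GCC/Clang); the function name `fp_target` is made up. Keep in mind that this only describes code generated from your own sources. Library routines such as `sin` may choose their own code path at run time, which could be one explanation for seeing SSE2 instructions with No Set.

```cpp
#include <string>

// Describes the floating-point instruction set selected at compile time,
// based on the compiler's predefined macros.
std::string fp_target() {
#if defined(_M_X64) || defined(__x86_64__)
    return "x86-64: SSE2 is part of the baseline";
#elif defined(_M_IX86_FP) && _M_IX86_FP >= 2
    return "32-bit MSVC: /arch:SSE2 or higher";
#elif defined(_M_IX86_FP) && _M_IX86_FP == 1
    return "32-bit MSVC: /arch:SSE";
#elif defined(_M_IX86_FP)
    return "32-bit MSVC: x87 (no /arch option)";
#elif defined(__SSE2__)
    return "SSE2 enabled (for example via -msse2)";
#elif defined(__SSE__)
    return "SSE enabled, SSE2 not enabled";
#else
    return "no SSE macros defined (x87 or a non-x86 target)";
#endif
}
```

Printing `fp_target()` at the start of a benchmark makes it easier to be sure which build is actually being timed.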

I changed the code a little bit (removed the Windows part) and tested it on a puny Atom.

Instead of using the timeGetTime function I used the Linux time command; the result is the time that the program spends in user space. This also counts the initialization, but that is on the order of 0.00001% of the total time, an acceptable error.
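Another way to drop the Windows dependency while still timing only the main loop is `std::chrono`. This is a minimal sketch, assuming a C++11 compiler (so not the VS2005 used in the article); the helper name `time_ms` is made up. Unlike the user-space time reported by the `time` command, it measures wall-clock time.

```cpp
#include <chrono>

// Runs `work` once and returns the elapsed wall-clock time in milliseconds.
template <typename F>
long long time_ms(F work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();
}
```

In the benchmark, the main loop would be wrapped in a lambda, for example `time_ms([&] { for (int i = 0; i < num; i++) mul_mat(mat1, mat2, sz); })`, and the returned value printed in place of `end-start`.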

Test1: No SSE No optimization

gcc matrixTest.cpp -o matrixNoSse -lstdc++

result -> 8.757s

Test2: No SSE with optimization

gcc matrixTest.cpp -O3 -o matrixNoSse -lstdc++

result -> 3.004s

Test3: SSE2 No optimization

gcc matrixTest.cpp -msse2 -mfpmath=sse -o matrixSse -lstdc++

result -> 5.875s

Test4: SSE2 with optimization

gcc matrixTest.cpp -O3 -msse2 -mfpmath=sse -o matrixSse -lstdc++

result -> 2.996s

Similar results if I compile enabling SSE3 and SSE4

SSE2 gives a big improvement without optimization, but with optimization the time is basically the same with or without SSE.

Any explanation? Probably the Atom executes the SSE instructions quite slowly.

By the way, looking at the assembly, the SSE version is a lot shorter. 🙂

AMD Athlon X2 2.2Ghz

x87: 964ms

SSE: 964ms

SSE2: 1312ms

I can’t see any boost here

e7400 stock

x87 2511

sse 2545

sse2 1170

With a Phenom II X4 p40BE @ 3GHz :

X87 : 668 ms

SSE : 667 ms

SSE 2: 993 ms

Like Rares, I would like to understand !?

Are the Phenoms really that bad at SSE2 (which would explain why they are not as good as Intel CPUs in games or video compression, for example)?

With Athlon (1) X2 @ 2,3ghz (brisbane g2)

x87: 978

SSE: 996

SSE2: 1287

LOL?

With athlon II X4 3,1ghz

x87: 630

SSE: 650

SSE2:960

That doesn’t look too good… I think there is a problem in the code, because if SSE2 were really slower than x87, it would be completely unnecessary and shouldn’t even be implemented on AMD CPUs. But it is implemented and used in nearly everything, and Intel disabled SSE2 usage in their compiler when the compiled program runs on AMD. Obviously, if it were really slower they wouldn’t disable it, because their goal was to make programs run slower on AMD, not faster.

LOL, didn’t you AMD users notice that your ‘slower’ CPUs, even an Athlon X2 @ 2.2GHz, were 2x faster than the ‘faster’ Intel CPUs, such as a stock Core i7 860, when running x87 code?

My Core 2 Duo @2.1GHz results:

x87: 3469 ms

SSE: 3416 ms

SSE2: 1569 ms