Morphological Anti-Aliasing from Pixel Bender Filters: MLAA

With the launch of the new Radeon HD 6870 (and HD 6850) series and the new Catalyst 10.10a, the web is flooded with MLAA, the acronym for MorphoLogical Anti-Aliasing. But what is MLAA, and how do you use or enable it?

What is MLAA?

In short, MLAA is a shape-based anti-aliasing method that uses post-processing filters to reduce aliasing. Like SSAO, MLAA can be hand-coded in a 3D app or added in the graphics driver.

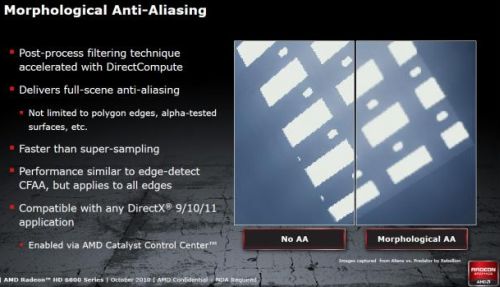

The following slide gives us an introduction to MLAA:

Here is the MLAA in action:

FINAL FANTASY XIV – No AA – (source)

FINAL FANTASY XIV – With MLAA

Now some links with more technical information about MLAA:

- Morphological Antialiasing @ realtimerendering.com

- Morphological Antialiasing (PDF) by Alexander Reshetov (Intel).

MLAA is designed to reduce aliasing artifacts in displayed images without casting any additional rays. It consists of three main steps:

1. Find discontinuities between pixels in a given image.

2. Identify predefined patterns.

3. Blend colors in the neighborhood of these patterns.

- Morphological Antialiasing on GPU. The presentation is available here: Practical morphological antialiasing on the GPU. You can download the demo, with source code and the GLSL shaders that implement MLAA, HERE.

Morphological antialiasing is a recently introduced filter-based approach to antialiasing that does not require computing additional samples. Due to bandwidth costs, it is often too expensive to add a CPU post-process to a real-time application, pushing the need for a GPU implementation. However, the algorithm is a non-linear filter, which implies heavy branching and image-wide knowledge for each sample, making it difficult to port smoothly to the GPU. We redesigned the algorithm using multiple passes, including a new line length detection method and area estimation using precomputed tables. We obtain high framerates even on medium-range hardware at high resolutions. Our complete implementation is freely available.
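To make the first steps listed above more concrete, here is a minimal CPU sketch in Python of step 1 (finding discontinuities between pixels) and of measuring the length of each edge line, which the pattern and blending steps rely on. It is not Intel's code or the GLSL demo: the image layout (rows of (r, g, b) floats), the Rec. 709 luminance metric and the 0.1 threshold are illustrative assumptions.

```python
# Minimal CPU sketch of MLAA step 1 (find discontinuities) and of the
# line-length measurement used by the later steps. Not the Intel or GPU code.
# Assumptions (illustrative only): an image is a list of rows of (r, g, b)
# floats in [0, 1], luminance uses Rec. 709 weights, and the edge threshold
# of 0.1 is an arbitrary example value.

def luminance(rgb):
    """Rec. 709 luma of an (r, g, b) tuple (assumed color-difference metric)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def find_edges(image, threshold=0.1):
    """Step 1: mark discontinuities between each pixel and its neighbours.

    bottom[y][x] is True when pixel (x, y) differs from (x, y + 1);
    right[y][x] is True when it differs from (x + 1, y).
    """
    height, width = len(image), len(image[0])
    lum = [[luminance(p) for p in row] for row in image]
    bottom = [[False] * width for _ in range(height)]
    right = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if y + 1 < height and abs(lum[y][x] - lum[y + 1][x]) > threshold:
                bottom[y][x] = True
            if x + 1 < width and abs(lum[y][x] - lum[y][x + 1]) > threshold:
                right[y][x] = True
    return bottom, right


def horizontal_lines(bottom):
    """Measure each horizontal edge line: a run of consecutive marked pixels.

    Returns (y, x_start, length) tuples in row-major order. The GPU version
    quoted above measures lengths with its own multi-pass method; this naive
    scan only illustrates what is being measured.
    """
    lines = []
    for y, row in enumerate(bottom):
        x = 0
        while x < len(row):
            if row[x]:
                start = x
                while x < len(row) and row[x]:
                    x += 1
                lines.append((y, start, x - start))
            else:
                x += 1
    return lines
```

For example, a 5x3 white-over-black staircase (first row white, second row white only in its first two pixels, third row black) gives the horizontal lines (0, 2, 3) and (1, 0, 2), plus a single vertical discontinuity between pixels (1, 1) and (2, 1): exactly the stair-step that the pattern and blending steps would then smooth.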

How to enable MLAA with Catalyst drivers?

MLAA support is provided by Catalyst 10.10a (hotfix) and is automatically enabled on the Radeon HD 6800 series, but it is disabled on the Radeon HD 5000 series. Why? Only AMD knows the answer…

How to enable MLAA on Radeon HD 5000 series?

Two ways:

1 – Windows registry (not tested yet; can someone confirm?)

Add (if not present) the entry “MLF_NA” to

HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Control\Class\{4D36E968-E325-11CE-BFC1-08002BE10318}000

and set MLF_NA to 0 to enable MLAA. Then reboot the PC.

2 – Tweaking the Cat 10.10a installation files

Just download this file: C7106976.txt.

Go to

C:\AMD\Catalyst_8.782.1RC5_Win7_MLAA_Oct21\Packages\Drivers\Display\W7_INF\

and replace the entire content of C7106976.inf with the content of C7106976.txt. Or, if you prefer, delete C7106976.inf and rename C7106976.txt to C7106976.inf 😉

Do the same thing for

C:\AMD\Catalyst_8.782.1RC5_Win7_MLAA_Oct21\Packages\Drivers\Display\W76A_INF\
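If you prefer to script the two INF swaps above, a minimal Python sketch follows. It assumes the Catalyst 10.10a package was extracted to the folder quoted in the article and that the downloaded C7106976.txt is passed in as `replacement`; the function name `patch_inf` and the `.bak` backup are choices made for this example, not part of the original trick.

```python
# Sketch of the INF swap described above, applied to both W7_INF and W76A_INF.
# Assumptions: the Catalyst 10.10a package was extracted to the folder quoted
# in the article, and the downloaded C7106976.txt is passed in as `replacement`.
import shutil
from pathlib import Path

PACKAGE = Path(r"C:\AMD\Catalyst_8.782.1RC5_Win7_MLAA_Oct21")
INF_DIRS = ("W7_INF", "W76A_INF")


def patch_inf(package_root, replacement):
    """Overwrite C7106976.inf in each INF folder with the modified file.

    The original is kept as C7106976.inf.bak; returns the patched paths.
    """
    patched = []
    for name in INF_DIRS:
        target = Path(package_root) / "Packages" / "Drivers" / "Display" / name / "C7106976.inf"
        if target.exists():
            # Keep a backup so the stock driver INF can be restored.
            shutil.copy2(target, target.with_name(target.name + ".bak"))
        shutil.copyfile(replacement, target)
        patched.append(target)
    return patched
```

Usage would be `patch_inf(PACKAGE, "C7106976.txt")` before launching the installer. Keeping the `.bak` copy means the stock INF can be put back if the modified driver refuses to install.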

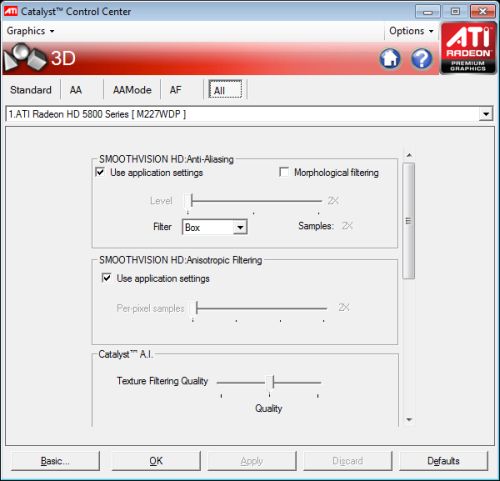

Now you’re ready to install Catalyst 10.10a. When the installation finishes, reboot Windows. If everything went well, you should see MLAA in the Catalyst Control Center (CCC):

MLAA + Cat 10.10a + HD 5870!

Thanks to PCGHX for the trick.

I’m not convinced this is anything special. It just looks like it’s blurring the edges of the final image; another one of those graphics rendering fudge factors, I think.

Very nice, beautiful! Thanks for the trick 🙂

I must admit it’s too blurry for my taste.

It didn’t even work. I tried the official hotfix: it installed, and after the reboot, nothing. Basic style. Then I tried modifying the files as shown, and it didn’t install either. HD 5870 card.

It’s great at very high res: where “normal” AA takes a massive hit, morphological AA doesn’t so much, and any blurring effect is much less obvious.

At lower resolutions you might as well use normal AA (if you can, i.e. it’s not GTA4).

It’s good, as it stops NVIDIA from blocking AMD cards from using AA like they did in Batman, and will probably do in other games. Good stuff AMD, it’s ****ing ace in high-res Eyefinity.

This method isn’t new. It was/is the only way to do AA in deferred shading renderers. But this implementation is really one of the worst I have seen: you usually take the pixel depth (and normal) into account and blur only on geometry edges. AMD instead blurs on color-space edges, causing blurred GUIs and missing detail in textures.

Somehow this reminds me a bit of the N64 😉

jK: This is not the only way to do AA in deferred shading. First of all, there is edge-AA (most probably you’re talking about that one). Morphological AA is a bit different. Also, you can do MSAA with deferred shading as we have sample shading and multisampled textures since DX10.1.

Besides that, MLAA in fact does not blur the image but rather smooths edges based on predefined morphological patterns (do some research on morphological image processing algorithms).

Anyway, even though MLAA has some side effects, e.g. on text, where it seems to curve the font more than it should, I really think this is a great technique, and it is much, much faster than MSAA.

I know it was already used in some apps; however, having it in the driver lets us use it in apps that don’t support it natively.

“Also, you can do MSAA with deferred shading as we have sample shading and multisampled textures since DX10.1.”

No sane developer will use MSAA alongside deferred shading, regardless of DX version. Doing so almost always produces some graphical anomalies.

Squall Leonhart:

Yes, in fact people don’t really use MSAA alongside deferred shading, but only because it eats up a tremendous amount of memory and bandwidth. However, it is possible, and with custom resolve shaders you don’t get the graphical anomalies you mentioned, because you don’t simply blend together the samples of e.g. normals and such. You can fetch individual samples from the multisampled texture, so it works fine; it just hurts performance.

“This is not the only way to do AA in deferred shading.”

That’s why I said “was/is”. Also, I didn’t mean the implementation itself; I meant AA as a post-screen FX. So depending on your target hardware, it is still the only way to achieve AA with deferred shading.

And I know that MLAA is a bit more than `blurring`, but the result is the same: it reduces the detail of the image. Also, MLAA itself reminds me more of the interpolation methods used for upscaling in video console emulators, but their problem is a bit different, so they have no other solution (they also increase the resolution, so they can even add `detail`).

Here, instead, you have a depth buffer (and, at the engine level, the normals too) and could control the FX much better than is done now.

I somehow missed the “was/is”, so sorry, and don’t take my comment as offensive. I just want to emphasize that morphological algorithms are a completely different approach from old-style methods (like edge-based blurring and so on).

I don’t know which type of MLAA ATI is using, but there are several variants of different quality:

1) Intel original MLAA

2) MLAA in God of War 3 (apparently nicer – runs on SPUs)

3) MLAA on the GPU as per the above GLSL demo – I don’t like the quality of it or the speed of it compared to the others

4) An MLAA on GPU for “GPU Pro 2”: http://www.iryokufx.com/mlaa/ (unknown quality comparison, but speed seems good).

Too much blur for me; it looks like instead of gaining detail, we lose it.


rant: “It’s good, as it stops NVIDIA from blocking AMD cards from using AA like they did in Batman, and will probably do in other games.”

Despite the “common knowledge” quip above, Nvidia did not block ATI AA in Batman; what happened was that ATI failed to provide the programmers to make AA work for ATI cards in Batman.

PR honcho Huddy at Hexus claimed, after whining about “being blocked”, that he had his BEST PROGRAMMER working with Batman’s producer and would make sure AA was delivered, because he knew ATI fans were counting on it.

The big ol’ interview is still posted at Hexus; just take a look.

What did happen was that for months and months, instead of having ATI programmers simply CODE AA for ATI cards, ATI, with types like Huddy, decided they could reap a far bigger bounty from the innuendo and hatred they could stir up against the competition.

Of course, people not being too bright nowadays, they went along with the Huddy ATI hack job, which of course is much easier and more satisfying than actually PAYING PROGRAMMERS to DO THE WORK REQUIRED for your cards to function well in games.

The same thing started to happen with StarCraft 2: Nvidia announced its AA was working, and ATI was caught flat-footed with nothing, unable to PAY $$$ programmers when the AMD parent company has unfit, debt-ridden finances…

Instead of pulling the same thing this time, they HAD TO PRODUCE, so ATI announced it would not release AA until “they were satisfied with its performance”.

Yes, they certainly wanted to pull another fast one, but the pressure was too great this time, and the trick likely wouldn’t work again.

Nonetheless, it is now “common knowledge” (even though it’s entirely FALSE) that Nvidia “blocked AA” for ATI in Batman Ark Asy.

LOL

Yes indeed, ATI is a dirty company, with a criminal CEO gone from its head, and lies and PR stunts work with the hyper red fanbase – they HATE “the questionable business practices” of their nemesis and “competitor” Nvidia, and any lie will do.

I find it a shame that people are so thoroughly ignorant and blind that they bought the lies of ATI’s Huddy.

ATI (AMD now) CAN’T PAY for enough programmers to get their drivers right.

When HUNDREDS travel about to the gaming studios for Nvidia, a FEW do the same for ATI.

That is DOCUMENTED and FACTUAL.

Since ATI’s budget is so bleak, it’s easier and cheaper, and “more productive” to blame the competition rather than actually produce the driver to fix the problem.

This new Morph AA is another trick – a “console port” style, cheesy and cheap AA – but at least it is something, since 32x MSAA is still an Nvidia exclusive, after all this time.

SiliconDoc: I see you are spreading your FUD and lies everywhere nowadays. Take some rest, have a vacation and come back as a happier person. None believe your crap anyway, so why bother?


Still can’t get it to work with my HD 4870. You’d think AMD would give us MLAA, as we need it more than HD 5000 and 6000 users…

@SiliconDoc

What a bunch o’ cr@p.. (didn’t read it all) u seem suspicious! Are u working for “ENVIDIA” (Spanish word)?


Just a little heads-up on the Catalyst MLAA: I’m running the 11.2 drivers (HD 5870), and although I notice little difference with MLAA on, it does have a side effect: text appears disjointed and is sometimes unreadable on a large screen. A simple fix is to alt+tab out, then back in. However, doing this for every game soon becomes tiresome, and I’d rather just turn regular AA on.

@SiliconDoc

COOL STORY BRO!!!

(now can you give us proof so we can believe you)

LOL @ all the people bitching about Batman. Batman didn’t have antialiasing support because the engine it was built on did not support AA. Batman was a piece of shit game, anyway.

@SiliconDoc

Wow, get a life. Personally, I am not convinced about MSAA yet either, though it’s now got native support in DEUS EX, released this week, and it seems to run very well indeed.

MLAA I meant lol.

Could be worse, could be super sampling. Now that is old fashioned crap. Deus Ex is a good game for comparing different AA types though.

Very impressed

I think that by moving to MLAA and reducing framebuffer callbacks, we can move to threaded fragment output (a future multi-threaded ROP transmission protocol) instead of framebuffer outputs, and move VRAM (framebuffers) off graphics cards and into future monitors.

This means:

- lower power consumption
- flexible framebuffer memory on monitors
- better (CrossFire, SLI) support
- space saving