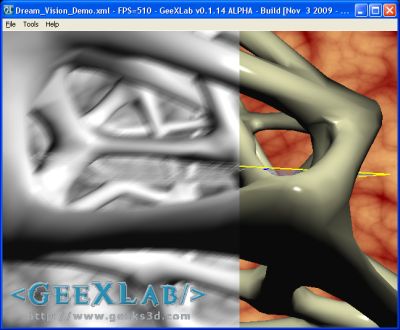

This new shader, from Geeks3D’s shader library, shows the kind of vision one may have in a dream…

You can grab the GLSL shader and the demo here:

[download#109#image]

This demo requires GeeXLab. It does not use Python, so the Python-free build of GeeXLab is sufficient.

Unzip the source code somewhere, start GeeXLab, and drop the file Dream_Vision_Demo.xml into GeeXLab.

Shader description

Language: OpenGL 2 – GLSL

Type: Post processing filter.

Inputs

- sceneTex (sampler2D): the final scene image.

Outputs: color buffer

Shader code:

    [Vertex_Shader]
    void main()
    {
      gl_Position = ftransform();
      gl_TexCoord[0] = gl_MultiTexCoord0;
    }

    [Pixel_Shader]
    uniform sampler2D sceneTex; // 0

    void main ()
    {
      vec2 uv = gl_TexCoord[0].xy;
      vec4 c = texture2D(sceneTex, uv);

      // Accumulate 12 more samples along the diagonal (the scalar offset
      // is added to both u and v), 6 on each side of the pixel.
      c += texture2D(sceneTex, uv+0.001);
      c += texture2D(sceneTex, uv+0.003);
      c += texture2D(sceneTex, uv+0.005);
      c += texture2D(sceneTex, uv+0.007);
      c += texture2D(sceneTex, uv+0.009);
      c += texture2D(sceneTex, uv+0.011);
      c += texture2D(sceneTex, uv-0.001);
      c += texture2D(sceneTex, uv-0.003);
      c += texture2D(sceneTex, uv-0.005);
      c += texture2D(sceneTex, uv-0.007);
      c += texture2D(sceneTex, uv-0.009);
      c += texture2D(sceneTex, uv-0.011);

      // Grayscale as the plain average of the three channels.
      c.rgb = vec3((c.r+c.g+c.b)/3.0);

      // 13 samples were summed; dividing by 9.5 instead of 13 leaves the
      // result brighter than a true average.
      c = c / 9.5;
      gl_FragColor = c;
    }

c.rgb = vec3((c.r+c.g+c.b)/3.0);

It’s not really a proper way to do color to B/W conversion. Your conversion displays a B/W image with a kind of purple filter.

A standard establishes weights for this purpose: R * 0.30, G * 0.59, B * 0.11 (the ITU-R BT.601 luma coefficients, more precisely 0.299, 0.587, 0.114).

These weights reflect the eye’s sensitivity to each channel.
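As a rough illustration of the weighted conversion described in this comment, here is a minimal pixel shader sketch written in the same OpenGL 2 style as the post. The constant name `LUMA` is made up for this example, and the BT.601 coefficients are an assumption about which standard the commenter means. The blur is left out to keep the focus on the grayscale step.

```glsl
uniform sampler2D sceneTex; // 0

// Assumed luma weights (ITU-R BT.601); LUMA is a hypothetical name.
const vec3 LUMA = vec3(0.299, 0.587, 0.114);

void main()
{
  vec2 uv = gl_TexCoord[0].xy;
  vec4 c = texture2D(sceneTex, uv);

  // Weighted sum of the channels instead of the plain (r+g+b)/3 average.
  float gray = dot(c.rgb, LUMA);

  gl_FragColor = vec4(vec3(gray), c.a);
}
```

In the shader from the post, the equivalent change would be to replace the averaging line with `c.rgb = vec3(dot(c.rgb, LUMA));`.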

Btw, it would be better to save the color buffer in black and white, using a GL_RED texture, to cut its memory bandwidth by a factor of 3. Moreover, this allows the use of the gather4 instruction to either reduce the number of texture fetches or increase the blur quality.

What do you smoke before going to bed?
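A possible sketch of the gather idea from this comment, under several assumptions: the scene has already been written to a single-channel (GL_RED) texture, here called `grayTex`, and the hardware supports GLSL 4.00, where the gather4 instruction is exposed as `textureGather`. The names `grayTex`, `uv` and `fragColor` are hypothetical, and this is not part of the original demo, which targets OpenGL 2.

```glsl
#version 400 core

uniform sampler2D grayTex; // hypothetical GL_RED copy of the scene
in vec2 uv;                // hypothetical input from a matching vertex shader
out vec4 fragColor;

void main()
{
  // A single fetch returns the red channel of the four texels in the
  // 2x2 footprint around uv (component 0 is the default).
  vec4 g = textureGather(grayTex, uv);

  // Box average of the four gathered texels.
  float gray = dot(g, vec4(0.25));

  fragColor = vec4(vec3(gray), 1.0);
}
```

Each gather replaces four single-texel reads, so a blur built this way could either use fewer fetches for the same footprint or cover more texels for the same number of fetches.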